Holographic AR: Not Your Grandfather's Holography

In the real world, when we see an object, it’s actually our eyes receiving light reflecting off that object—a wavefront of light. The wavefront incorporates complex information that our eyes can interpret about brightness, color, and distance (phase) properties of the light waves, which enable us to perceive the object in three dimensions (3D).

By contrast, when we look at a display screen, we see light that is being emitted from a two-dimensional plane by the individual pixels of the display (even if the pixels are too small for our eyes to perceive them). Holograms aim to replicate the real-world effect of light being reflected off an object. Essentially, today’s holograms consist of a computer-generated replica of a wavefront projected from a display screen or onto a transparent panel, using an interference pattern to mimic the real-world wavefront from an object—making 2D projections appear 3D (an autostereoscopic approach).

In a past blog post, we discussed the history and technology of holograms. In the early days of holograms, photographic plates with a special coating were used to record amplitude and phase information of the wavefront to create an image. Today, computers and displays are used to generate holographic projections. A typical computer-generated hologram is calculated by algorithms and projected using a spatial light modulator.1 While some augmented reality (AR) systems use display screens such as OLED that emit images or clear panels that reflect a projected image, advanced holographic techniques are emerging as an AR visualization approach with mass-market potential.

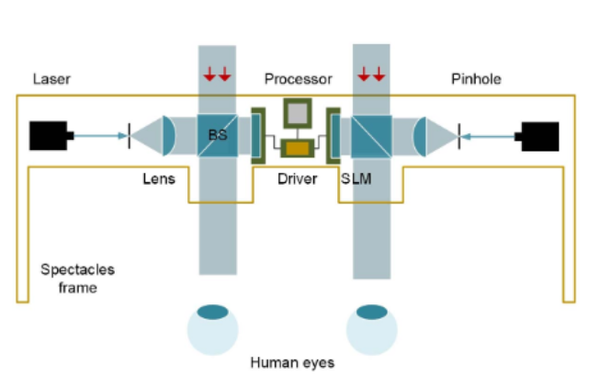

Diagram of an AR device based on a computer-generated holographic (CGH) display. The CGH is uploaded onto a spatial light modulator. Light diffracted under illumination by the reference beam reaches the viewer's eyes through one path of a beam splitter, while light from the real environment enters through the other path, so the viewer sees AR images combined with the background environment. (Image: Source)
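To make "calculated by algorithms" a little more concrete, here is a minimal sketch of one textbook approach, the point-source method: each object point is treated as a source of a spherical wave, the waves are summed at every modulator pixel, and only the phase is kept. This is an illustration under stated assumptions, not the method used by any system mentioned in this post. The function name, grid size, pixel pitch, and wavelength are all hypothetical example values.

```python
import cmath
import math

def point_source_hologram(points, nx, ny, pitch, wavelength):
    """Return a phase-only hologram (radians) for (x, y, z, amplitude) points.

    The hologram plane sits at z = 0 and is sampled on an nx-by-ny grid of
    pixels spaced `pitch` metres apart, centred on the origin.
    """
    k = 2.0 * math.pi / wavelength  # wavenumber
    hologram = []
    for row in range(ny):
        y = (row - (ny - 1) / 2.0) * pitch
        line = []
        for col in range(nx):
            x = (col - (nx - 1) / 2.0) * pitch
            field = 0j
            for px, py, pz, amp in points:
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
                field += amp * cmath.exp(1j * k * r) / r  # spherical wave
            line.append(cmath.phase(field))  # keep phase only
        hologram.append(line)
    return hologram

# Hypothetical scene: two points about 10 cm from the modulator, green light.
points = [(0.0, 0.0, 0.10, 1.0), (1e-4, -1e-4, 0.12, 0.5)]
holo = point_source_hologram(points, nx=16, ny=16, pitch=8e-6, wavelength=532e-9)
```

The cost grows with the number of object points times the number of pixels, which is why this direct summation becomes expensive for detailed, moving scenes.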

Traditional AR/VR devices are based on a binocular vision display or light field display, both of which can suffer from vergence-accommodation conflicts that lead to user dizziness or fatigue. Holographic displays offer 3D visual perception without creating this conflict between where the eyes converge and where they focus, mitigating these negative user effects.

Adoption of AR outside of industrial/enterprise applications has been slow, in part due to these physical effects. Lackluster consumer interest in smart glasses and AR devices has also been attributed to the discomfort of wearing a head-mounted device (HMD) for an extended period, and to style concerns over bulky or unattractive smart glasses designs. Most consumers still rely on their smartphones for on-the-go information.

Advancing the Science of Holography While Improving User Experience

Producing static holographic 3D images is a starting point, but a more compelling AR experience is necessary to drive more market interest. Researchers and companies keep pushing the technology forward, pursuing more interactive, immersive, and headset-free augmented reality and holographic methods to enhance the experience.

For example, at the SPIE AR VR MR Conference 2020, researchers from the University of Illinois at Urbana-Champaign presented a holographic method for an AR near-eye display system based on the projection of a digital amplitude-only computer-generated hologram (AO-CGH) on a digital micromirror device (DMD).2 The operating principle for the device is based upon Fresnel holography and spatial light modulator encodings, enabling the system to display a high-resolution 3D image with accurate depth cues. They suggest this technique can be used for a compact, low-cost device that could be appealing for consumer applications.
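A DMD can only switch each mirror on or off, so a complex wavefront has to be encoded as a binary amplitude pattern. The sketch below shows one generic, classical way to do that: interfere the field with a tilted reference wave and threshold the resulting fringe. It is an assumption for illustration only and is not taken from the cited paper; the function name, tilt angle, and pixel pitch are hypothetical, and this simple version discards amplitude variations in the field.

```python
import cmath
import math

def binary_amplitude_hologram(field, pitch, wavelength, tilt_deg=1.0):
    """Encode a complex field as an on/off pattern via off-axis interference.

    `field` is a 2D list of complex samples at the modulator plane. Each
    pixel is set to 1 (mirror on) or 0 (mirror off).
    """
    # Carrier frequency of the tilted plane reference wave, in cycles/metre.
    fx = math.sin(math.radians(tilt_deg)) / wavelength
    pattern = []
    for row in field:
        out = []
        for col, sample in enumerate(row):
            reference = cmath.exp(2j * math.pi * fx * col * pitch)
            # Cross term of |sample + reference|^2, proportional to
            # |sample| * cos(arg(sample) - arg(reference)).
            fringe = (sample * reference.conjugate()).real
            out.append(1 if fringe >= 0.0 else 0)
        pattern.append(out)
    return pattern

# Hypothetical input: a flat wavefront, which yields plain vertical fringes.
flat = [[1 + 0j] * 16 for _ in range(4)]
pattern = binary_amplitude_hologram(flat, pitch=7.6e-6, wavelength=532e-9)
```

When such a pattern is illuminated, the encoded wavefront appears in one diffraction order, separated in angle from the undiffracted light by the reference tilt.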

Here is a glimpse at some further areas of holographic innovation—interactivity, immersion, and headset-free systems—that could help overcome consumer AR adoption barriers and change how we interact with technology in the future.

Interactive Holograms

You may have seen Tony Stark in the Iron Man and Avengers movies interacting with his giant AR displays, swiping mid-air images in and out of view with a wave of his hand. Now researchers are getting closer to realizing that capability, but they’re starting small, with finger gestures.

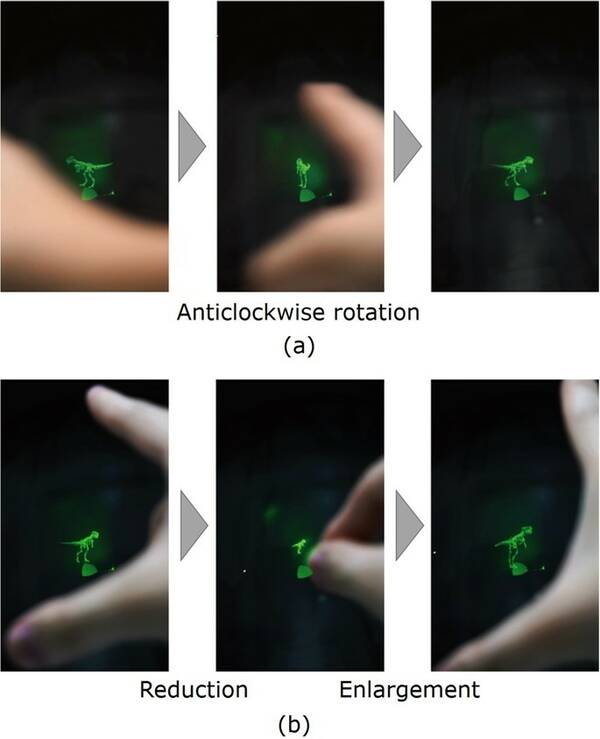

A team at Chiba University in Japan has developed an “interactive, finger-sensitive system which enables an observer to intuitively handle electro-holographic images in real time. In this system, a motion sensor detects finger gestures (swiping and pinching) and translates them into the rotation and enlargement/reduction of the holographic image, respectively.”3

Images showing an operator (a) rotating a 3D image using a swiping gesture and (b) reducing and enlarging the image using pinching gestures. The image of Tyrannosaurus Rex consists of 4,096 point light sources. (Image: Source)
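As a rough illustration of the gesture mapping described above, the sketch below turns a swipe into a rotation and a pinch into a uniform scale of a point cloud, which would then be fed back into the hologram calculation. The function name, the `degrees_per_unit` sensitivity, and the choice of the vertical axis are assumptions for this example, not details from the cited paper.

```python
import math

def apply_gesture(points, swipe_dx=0.0, pinch_ratio=1.0, degrees_per_unit=90.0):
    """Rotate (x, y, z) points about the vertical (y) axis, then scale them.

    swipe_dx:    horizontal swipe distance in sensor units (hypothetical).
    pinch_ratio: current finger spacing divided by the starting spacing.
    """
    angle = math.radians(swipe_dx * degrees_per_unit)
    c, s = math.cos(angle), math.sin(angle)
    result = []
    for x, y, z in points:
        xr = c * x + s * z   # rotation about the y axis
        zr = -s * x + c * z
        result.append((pinch_ratio * xr, pinch_ratio * y, pinch_ratio * zr))
    return result

# A swipe of half a unit followed by a pinch that enlarges the model by 1.5x.
cloud = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
updated = apply_gesture(cloud, swipe_dx=0.5, pinch_ratio=1.5)
```

Because every gesture changes the point positions, the hologram has to be recomputed for each frame, which is what makes real-time interaction computationally demanding.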

Headset-Free AR Experiences

Recent developments are pushing holography innovation further, particularly in the realm of AR. The next generation of holographic displays (variously referred to as holographic interfaces, light field displays, superstereoscopic displays, or volumetric displays4) will allow users for the first time to break free from wearing hardware such as a headset or smart glasses because images are projected into real space. This not only lets a single user view holograms unencumbered, but also lets multiple users experience the same hologram at the same time, from multiple 3D angles.

These new systems mean that “groups of people can now interact with virtual characters and worlds without the friction of gearing up, delivering on the promise of a holographic future long promised in science fiction.”5 The potential applications of this headset-free breakthrough are vast, with predictions that “nearly every industry—medical imaging, communication, 3D design, advertising, the memories industry, entertainment, drug design, education—will be transformed by this shift over the coming few years.”6 The automotive industry is also applying AR techniques for head-up display (HUD) systems in vehicles.

An AR system lets an architect preview a building design in 3D…but the rest of his team can’t see the image without headsets. New holographic interfaces will be viewable by all to enhance collaboration. (Image: Looking Glass)

Holographic AR

Another area of innovation in holography is termed “Holographic Augmented Reality,” not to be confused with simply using holography to project objects or images into the user’s current environment (with or without headsets). Holographic AR refers to a more immersive experience, where the user inserts themselves into an interactive environment. “Holographic Augmented Reality extracts people and/or objects from the real world, in real time, and immerses them into the virtual environment where they can interact with digital elements naturally. In other words, Holographic AR allows users to see themselves in the virtual world and interact with objects found within the same space.”7

A key aspect of Holographic AR is its integration of body movements—hand gestures, facial expressions and even voice commands—to control the experience, rather than requiring any hand-held controller device. This type of organic user tracking is called a Natural User Interface (NUI), which includes the work cited above using finger gestures.

Holographic AR has significant potential for educational purposes, for example, immersing a student in an environment they are learning about and enabling them to virtually explore and interact with that environment.

A student can visit Mars and have a close-up experience interacting with the Curiosity Rover. (Image: Integem)

Not Your Grandfather’s Holography

One company that’s at the forefront of hologram technology is VividQ, a Cambridge University-based start-up that is working to create consumer mass-market holographic AR displays. The title of this blog post is borrowed from them (with permission).

One challenge for any type of interactive or immersive holography is the computational load involved. Traditional hologram calculation does not scale to the massive point clouds involved in generating images that can move and change in real time. VividQ has developed a layer-based FFT (Fast Fourier Transform) algorithm with an LCoS panel, and applied additional methods to speed up processing. In simple terms, an LCoS display has a set of apertures that light passes through, enabling the system to control the interference pattern to generate a high-resolution image in full color.
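The general idea behind layer-based FFT methods can be sketched as follows: the scene is sliced into a few depth layers, each layer is propagated to the hologram plane with FFTs, and the results are summed. The work then grows with the number of layers rather than the number of points. This is a generic illustration using angular-spectrum propagation and a small pure-Python FFT; it is not VividQ's algorithm, and all names and parameter values are assumptions.

```python
import cmath
import math

def fft(values, inverse=False):
    """Recursive radix-2 FFT; len(values) must be a power of two."""
    n = len(values)
    if n == 1:
        return list(values)
    even = fft(values[0::2], inverse)
    odd = fft(values[1::2], inverse)
    sign = 1.0 if inverse else -1.0
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def fft2(grid, inverse=False):
    """2D FFT of a square grid: transform rows, then columns."""
    rows = [fft(row, inverse) for row in grid]
    cols = [fft(list(col), inverse) for col in zip(*rows)]
    scale = float(len(grid) ** 2) if inverse else 1.0
    return [[v / scale for v in row] for row in zip(*cols)]

def propagate(layer, distance, pitch, wavelength):
    """Angular-spectrum propagation of a square layer over `distance` metres."""
    n = len(layer)
    spectrum = fft2(layer)
    for r in range(n):
        fy = (r if r < n // 2 else r - n) / (n * pitch)
        for c in range(n):
            fx = (c if c < n // 2 else c - n) / (n * pitch)
            arg = 1.0 / wavelength ** 2 - fx ** 2 - fy ** 2
            if arg > 0:
                phase = 2j * math.pi * distance * math.sqrt(arg)
                spectrum[r][c] *= cmath.exp(phase)
            else:
                spectrum[r][c] = 0j  # drop evanescent components
    return fft2(spectrum, inverse=True)

def layer_based_hologram(layers, pitch, wavelength):
    """Sum the propagated fields of (distance, amplitude_grid) layers."""
    n = len(layers[0][1])
    total = [[0j] * n for _ in range(n)]
    for distance, amplitude in layers:
        field = propagate(amplitude, distance, pitch, wavelength)
        for r in range(n):
            for c in range(n):
                total[r][c] += field[r][c]
    return total

# Hypothetical scene: one bright pixel on each of two depth layers.
near = [[0.0] * 8 for _ in range(8)]
far = [[0.0] * 8 for _ in range(8)]
near[3][4] = 1.0
far[5][2] = 1.0
hologram = layer_based_hologram([(0.05, near), (0.06, far)], 8e-6, 532e-9)
```

A production system would use an optimized FFT library and hardware acceleration; the hand-written FFT here only keeps the example free of dependencies.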

The market for HMD AR devices has so far been limited due to challenges such as bulky form factors, eye strain and overheating after prolonged use. New Holographic AR approaches are helping to remove these barriers, smoothing the way to mass consumer adoption.

CITATIONS

1. He, Z., et al., “Progress in virtual reality and augmented reality based on holographic display”, Applied Optics, Vol. 58(5), February 10, 2019.

2. Chang, C., et al., “Holographic near-eye 3D display based on amplitude-only wavefront modulation”, Proc. SPIE 11310, Optical Architectures for Displays and Sensing in Augmented, Virtual, and Mixed Reality (AR, VR, MR), 113100V, February 19, 2020. https://doi.org/10.1117/12.2544452

3. Yamada, S., et al., “Interactive Holographic Display Based on Finger Gestures”, Scientific Reports 8, 2010 (2018), January 31, 2018. https://doi.org/10.1038/s41598-018-20454-6

4. Frayne, S., “Holograms, VR, AR, & the future of reality”, Looking Glass Blog, September 17, 2019.

5. Ibid.

6. Ibid.

7. “What is Holographic AR”, Integem (retrieved May 7, 2020).
