Blast From the Past: Virtual Reality

Virtual and augmented reality (VR and AR) technologies are already revolutionizing aspects of everyday life, from consumer entertainment to medical care, retail, military operations, transportation, and more. As we move into this new virtual-enabled future, we can gain perspective by remembering where the industry has come from. We hope you enjoy these highlights from the history of virtual reality devices.

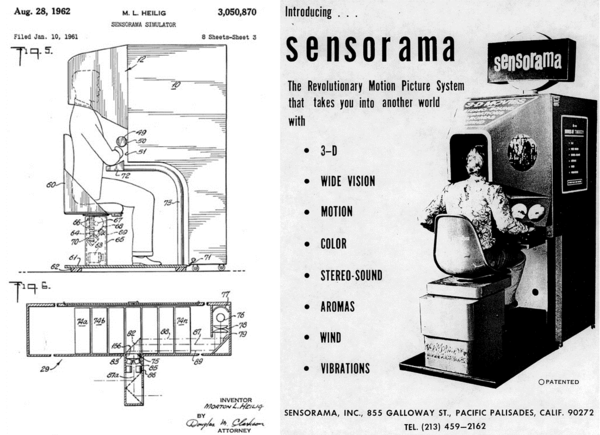

Sensorama. In 1955, filmmaker Morton Heilig described his vision of a multi-sensory theater experience. "If we're going to step through the window into another world," he said, "why not go the whole way?" He built a prototype and in 1962 introduced Sensorama (U.S. Patent #3,050,870). It was a mechanical device that displayed stereoscopic 3-D images in a wide-angle view and incorporated body tilting, stereo sound, wind, and aromas. Heilig, sometimes called the Father of Virtual Reality, created short films for the device, including a bicycle ride through Brooklyn. However, Sensorama failed to catch on in the movie industry.

Headsight. Motion tracking was the next innovation. In 1961, researchers at Philco constructed a head-mounted display (HMD) that enabled a wearer to observe another room. The device used magnetic tracking to monitor the user’s head movements. It had a video screen for each eye that displayed live video from a closed-circuit camera mounted remotely in the other room, which rotated to match the wearer’s head movements. Some people contend that Headsight was the first VR HMD, but it lacked integration with a computer or any image-generation capability.

The Sword of Damocles. The next breakthrough in AR/VR HMDs was the Sword of Damocles, created in 1968 by computer scientist Ivan Sutherland (who authored a seminal essay on VR called “The Ultimate Display” and is widely regarded as “the father of computer graphics”) with the help of his then-student at Harvard, Bob Sproull (who went on to an illustrious career in academia, at Sun Microsystems, and in government scientific advisory roles).

The device was named after the Greek tale of the sword that hung over the head of Damocles: Sutherland and Sproull’s device was so heavy that it had to be suspended from the ceiling to relieve the weight on the person wearing the headset. Watch the Sword in action:

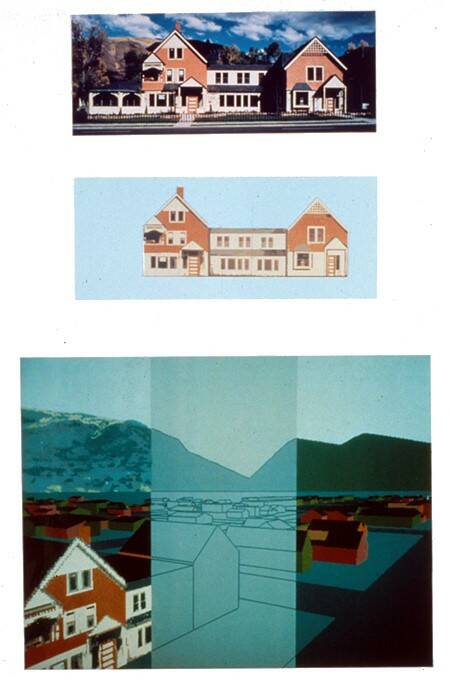

Aspen Movie Map. Even today’s popular Google Earth VR application had an early precursor. In 1978, Andy Lippman and a team from MIT developed a travel application that allowed the user to enjoy a simulated ride through Aspen, Colorado. To create the experience, the team mounted a gyroscopic stabilizer with four 16mm stop-frame film cameras on top of a car and drove it down the middle of every street in Aspen. An encoder triggered the cameras to take images every 10 feet, with front, back, and side views. The film was then assembled into a collection of discontinuous scenes, correlated to a two-dimensional street plan. When a user chose a route through the city, the related images were presented, although the path was limited to 10-foot steps taken in the center of the street, seen from one of the four orthogonal views.

Aspen’s building facades were texture-mapped onto 3D models, then translated to 2D screen coordinates for a database of buildings. [Image from Creative Commons BY-SA 3.0]

VPL Research. VPL Research, founded by Jaron Lanier in 1984, was among the first companies to build and sell commercially viable VR products. VPL stands for “visual programming languages,” which Lanier developed for programming VR applications. Lanier is also credited with coining the term “virtual reality.” Among the devices produced by VPL were:

- The EyePhone (not to be confused with… well, you know) was an HMD designed to immerse users in a computer simulation. Its two LCD screens each displayed a slightly different image to create the illusion of depth. It tracked the user’s head movements and used Fresnel lenses, which are still found in some VR devices today.

- The Data Glove was wired with micro-controllers and fiber-optic bundles to track movement and orientation. Data was fed to a computer, allowing a user to manipulate virtual objects. Wired gloves and other integrated manual control mechanisms for AR/VR continue to be an area of development today.

VPL Research founder Jaron Lanier introduces the Data Glove

The Sega VR Headset. If you don’t remember playing with a Sega VR Headset back in 1994, there’s a good reason for that: it was never released. The device had LCD screens in the visor, stereo headphones, and used inertial sensors in the headset that allowed the system to track and react to the wearer’s movements. But rumor has it that issues with motion sickness and user discomfort contributed to Sega’s decision to pull the product.

The human eye is a sensitive and finely tuned mechanism. Latency (time lag) of even tens of milliseconds between a user’s head movement and the corresponding change in the headset’s display image can cause physical symptoms such as nausea and headaches. As recently as 2013, achieving low latency was being called “the final challenge” for mass-market-ready VR.
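To put those latency figures in perspective, here is a minimal sketch using a first-order approximation: during a steady head turn, the displayed scene trails the true head direction by roughly angular speed × latency. The function names are hypothetical, and the 100°/s head speed and 15 pixels-per-degree display resolution are illustrative assumptions, not figures from any particular headset. The sketch also ignores motion prediction, which modern headsets use to hide part of the delay.

```python
def angular_lag_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """First-order estimate of how far (in degrees) the displayed scene
    trails the true head direction during a steady head turn."""
    if head_speed_deg_per_s < 0 or latency_ms < 0:
        raise ValueError("speed and latency must be non-negative")
    # degrees/second * seconds of delay = degrees of misregistration
    return head_speed_deg_per_s * latency_ms / 1000.0


def lag_in_pixels(head_speed_deg_per_s: float, latency_ms: float,
                  pixels_per_degree: float) -> float:
    """Convert the angular lag into display pixels, given an assumed
    angular resolution of the display (pixels per degree)."""
    return angular_lag_deg(head_speed_deg_per_s, latency_ms) * pixels_per_degree


if __name__ == "__main__":
    # Assumed values: a 100 deg/s head turn on a 15 pixels-per-degree display.
    for latency in (5, 20, 50):
        print(latency, "ms ->", angular_lag_deg(100, latency), "deg,",
              lag_in_pixels(100, latency, 15), "px")
```

Under these assumptions, 20 ms of latency leaves the image about 2° (30 pixels) behind the head, which helps explain why even small delays are perceptible.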

What’s Next…? AR/VR technology has advanced rapidly in the last five years (let alone the last 55!). To make the technology work effectively for the applications of today and tomorrow, designers are still grappling with some of the same challenges as these early inventors, such as head tracking, image latency, and optimizing the user’s immersive experience.

Something that can help support the quality and advancement of modern AR/VR devices is an accurate measurement solution for HMD displays. Leveraging a history of display test experience and innovation, Radiant developed a new AR/VR Lens that offers a unique optical design specially engineered for measuring near-eye displays (NEDs), such as those integrated into virtual (VR), mixed (MR), and augmented reality (AR) headsets.

The AR/VR Lens captures the full field of view (FOV) of the display (up to 120° horizontal, approximating the human binocular FOV). With its front-integrated aperture, the lens simulates the size, position, and field of view of the human eye. Paired with a Radiant ProMetric® 16- or 29-megapixel imaging photometer or colorimeter, the AR/VR Lens captures high-resolution images of displays to detect both large-area and pixel-level defects and anomalies in LED, LCD, OLED, and projection displays. Standard tests in Radiant's TrueTest™ Software for display testing include luminance, chromaticity, contrast, uniformity, mura (blemishes), pixel and line defects, and more. Additional specialized tests for AR/VR display analysis include:

- Modulation transfer function (MTF) to evaluate image clarity, based on the ISO 12233 slanted-edge method

- Image Distortion (for distortion characterization)

- Image Retention/Sticking

- Factory distortion calibration of the lens solution to normalize wide field-of-view images for testing

- Spatial x,y position reported in degrees (°)
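For readers unfamiliar with edge-based MTF, the simplified sketch below shows the core idea: a measured edge profile (the edge spread function, ESF) is differentiated into a line spread function (LSF), and the magnitude of the LSF's Fourier transform, normalized to 1 at zero frequency, is the MTF. This is a generic 1-D illustration, not Radiant's TrueTest implementation. It deliberately omits what makes the ISO 12233 method robust: the slanted edge itself (which lets many image rows be projected onto a finely oversampled profile), windowing, and noise handling. The function name `edge_to_mtf` is hypothetical.

```python
import cmath
from typing import List


def edge_to_mtf(esf: List[float]) -> List[float]:
    """Estimate an MTF from a 1-D edge spread function (simplified sketch).

    Returns normalized magnitudes for frequencies k = 0 .. N//2, in cycles
    per N samples of the line spread function (index N//2 is Nyquist).
    """
    if len(esf) < 3:
        raise ValueError("need at least 3 samples")
    # Line spread function: first difference of the edge profile.
    lsf = [esf[i + 1] - esf[i] for i in range(len(esf) - 1)]
    n = len(lsf)
    mags = []
    for k in range(n // 2 + 1):
        # Direct discrete Fourier transform at frequency k.
        s = sum(lsf[x] * cmath.exp(-2j * cmath.pi * k * x / n) for x in range(n))
        mags.append(abs(s))
    dc = mags[0]
    if dc == 0:
        raise ValueError("flat profile: no edge found")
    # Normalize so the MTF is 1 at zero frequency.
    return [m / dc for m in mags]


if __name__ == "__main__":
    # A slightly blurred dark-to-bright edge.
    print([round(v, 3) for v in edge_to_mtf([0, 0, 0, 0.25, 0.75, 1, 1, 1, 1])])
    # → [1.0, 0.854, 0.5, 0.146, 0.0]
```

A perfectly sharp edge yields an MTF of 1 at every frequency; the blurrier the edge, the faster the curve falls toward zero, which is why MTF serves as a measure of image clarity.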

To learn more about Radiant’s AR/VR Lens solution and its benefits for HMD evaluation, view the webinar: “Replicating Human Vision for Accurate Testing of AR/VR Displays.”
