Human Factors Considerations for AR/VR Display Quality

Augmented, virtual, and mixed reality (AR/VR/MR) devices and other head-mounted displays (HMDs) are unique in the display industry because they are viewed at very close range. Unlike televisions seen from across a room or smartphones held at arm’s length, these aptly named Near-Eye Displays or Near-to-Eye Displays (NEDs) are typically positioned just 1.2 to 3 inches from the user’s eye.

Because of this viewing proximity, any defects or lack of clarity in the displayed image can be glaringly obvious. Display performance issues such as low frame rate, slow pixel refresh, or distortion can also degrade the user experience. This is especially true of VR devices, which fill the wearer’s entire field of view and therefore provide no visual cues from the real-world environment to help orient the user.

Example of how frame rate can affect image quality. Refresh rate is the number of times per second a display refreshes to show a new image, expressed in hertz (Hz); a 60 Hz display refreshes 60 times per second. Frame rate and refresh rate together determine how well fast-moving images are reproduced. (Image: © Display Ninja)
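Because refresh rate is a frequency, the time available for each refresh is its reciprocal. The minimal Python sketch below converts a few refresh rates into per-refresh frame times; the function name `frame_time_ms` and the chosen rates are illustrative, not taken from the source.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one display refresh, in milliseconds, for a refresh rate in Hz."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Illustrative refresh rates (hypothetical examples, not headset specifications).
for hz in (60, 90, 120):
    print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per refresh")
```

At 60 Hz this gives about 16.67 ms per refresh and at 120 Hz about 8.33 ms. When content is rendered at a lower frame rate than the display's refresh rate, the same frame is typically shown for more than one refresh.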

The impact of display quality and performance issues goes beyond dissatisfaction with the visual experience: these issues can also cause physical discomfort such as dizziness and motion sickness. This phenomenon, called virtual reality sickness, can include “nausea, dizziness/lack of balance, drowsiness, warmth, sweating, headaches, disorientation, eye strain, and vomiting. Studies have shown that participants in a VR experience can feel ill up to several hours after taking off their VR headsets.” [1] These “human factors” challenges are critical for device designers to address, and for manufacturers to detect once AR/VR/MR hardware goes into production.

Human Factors Considerations

From the early days of AR/VR, developers have grappled with a range of user experience issues. The human eye and other sensory systems are finely tuned instruments, exquisitely sensitive to the tiniest inconsistencies, so designing hardware that satisfies them has been an ongoing challenge. Common human factors issues for AR/VR/MR systems include:

- Inconsistent cues from the visual and vestibular sensory systems
  - Motion that is “felt” but not “seen”
  - Motion that is “seen” but not “felt”
  - Both systems detect motion, but the signals do not correspond
- Visual experience
  - “Screen-door” effects due to “low” display resolution (high pixel pitch)
  - Field of view (FOV) limitations
  - Motion artifacts due to “low” display frame rates
- System/ergonomic issues
  - Weight, comfort, tether, eye relief, etc.
  - Power dissipation, battery life, etc.
  - Tracking that requires external setup vs. “inside-out” tracking; 3 degrees of freedom (DOF) vs. 6 DOF
- Inconsistent oculomotor (depth perception) cues
  - Eye accommodation/convergence mismatch
  - Incorrect focus/blur cues
- Missing or inconsistent proprioception cues (perception of the body’s orientation in space) [2]
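Display resolution and field of view trade off against each other: spreading the same number of pixels over a wider FOV leaves fewer pixels per degree of visual angle, which makes pixel structure, including the gaps behind the screen-door effect, easier to see. The Python sketch below computes average pixels per degree (PPD) along one axis. It assumes pixels are spread evenly across the field of view, which real headset optics do not do exactly, and the panel resolution and FOV values are hypothetical.

```python
def average_ppd(pixels: int, fov_deg: float) -> float:
    """Average pixels per degree along one axis, assuming a uniform angular spread."""
    if fov_deg <= 0:
        raise ValueError("field of view must be positive")
    return pixels / fov_deg


# Hypothetical per-eye panel with 1920 horizontal pixels viewed through two different optics.
narrow = average_ppd(1920, 90.0)   # about 21.3 PPD
wide = average_ppd(1920, 120.0)    # 16.0 PPD
print(f"90 deg FOV: {narrow:.1f} PPD, 120 deg FOV: {wide:.1f} PPD")
```

Widening the FOV from 90° to 120° with the same panel lowers the average angular resolution by a quarter, so each pixel (and each inter-pixel gap) subtends a larger angle.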

Visual images from a VR headset must match other sensory cues the user receives from their environment.

One particularly challenging aspect of human optical/neurological processing is how we perceive depth in our surroundings. Oculomotor cues come from sensing the position of our eyes and the tension of the eye muscles, and they involve two functions of the eye: convergence (vergence) and accommodation.

Vergence and Accommodation

While many of the demands placed on system engineers are being effectively met with today’s AR/VR/MR devices, challenges remain. “Many characteristics of near-eye displays that define the quality of an experience, such as resolution, refresh rate, contrast, and field of view, have been significantly improved in recent years. However, a significant source of visual discomfort prevails: the vergence-accommodation conflict (VAC), which results from the fact that vergence cues (e.g., the relative rotation of the eyeballs in their sockets), but not focus cues (e.g., deformation of the crystalline lenses in the eyes), are simulated in near-eye display systems. Indeed, natural focus cues are not supported by any existing near-eye display.” [3]

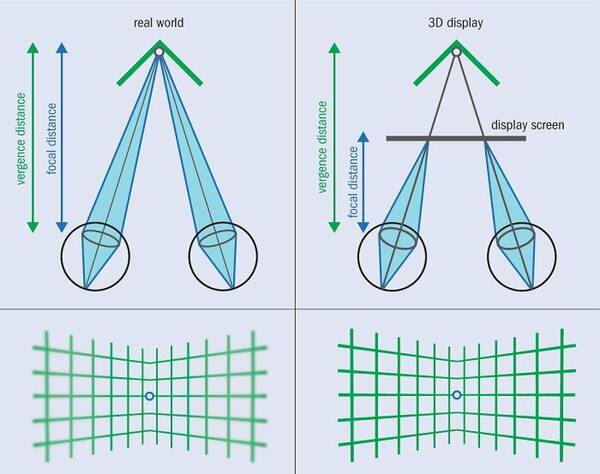

Convergence (vergence) is the inward rotation of both eyes so that their lines of sight meet at the object being viewed. Accommodation is the change in the shape of the eye’s crystalline lens that brings objects at different distances into focus. In the real world, when you look at an object at a certain distance, your eyes both verge and accommodate to that same distance. The conflict arises with AR/VR/MR devices because the eyes focus on the display, whose image sits at a single fixed distance from the wearer’s eye, while stereoscopic content is rendered as if objects were nearer or farther away, so the vergence and accommodation distances do not match.
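This mismatch is commonly expressed in diopters (D), the reciprocal of distance in meters, because the eye's focusing demand scales with 1/distance. The Python sketch below computes the vergence angle for symmetric fixation straight ahead, and the vergence-accommodation difference when the eyes focus at a fixed display distance but converge on virtual objects at other distances. The 63 mm interpupillary distance (IPD) and 2 m focal distance are illustrative assumptions, not the specifications of any particular headset.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m (symmetric fixation; IPD is an assumed default)."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def vac_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Magnitude of the vergence-accommodation mismatch in diopters (1/m)."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


# Assumed headset whose display image sits at a fixed 2 m focal distance.
FOCAL_M = 2.0
for obj_m in (0.5, 1.0, 2.0, 10.0):
    print(f"object at {obj_m:>4} m: vergence {vergence_angle_deg(obj_m):5.2f} deg, "
          f"mismatch {vac_diopters(obj_m, FOCAL_M):.2f} D")
```

Under these assumptions, an object rendered at 0.5 m produces a 1.5 D mismatch (2 D of vergence demand vs. 0.5 D of focus demand), while an object rendered at the 2 m focal distance produces none.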

“When you look at an object in the real world (left), the vergence distance (that is, the distance to the place where your eyes are pointing) and focal distance are identical, and objects away from the focus [point] appear blurred. (right) In a typical stereoscopic 3D display, however, your eyes focus on the display screen, but may point at a different location, and all objects appear in focus.” [4] (Image © Physics World)

At the recent Society for Information Display (SID) virtual Display Week 2020 symposium, several technical presentations focused on advancements toward addressing the technical and optical challenges of AR/VR, including new progress in resolving VAC issues. For example:

- Scientists at the UC Berkeley Center for Innovation in Vision and Optics determined that objective (vs. subjective) measurement of best focus distance can introduce errors (accommodative lags and leads), and that human accommodation is more accurate than previously thought. [5]

- Facebook researchers developed a method to quantify a “Zone of Clear Vision,” which defines the magnitude of VAC that a user can tolerate before image quality is perceptibly affected. [6]

- Researchers at the National Taiwan University of Science and Technology presented a study on reducing motion sickness with texture blur, using a first-person shooting VR game. [7]

Ensuring Visual Quality of AR/VR/MR Devices

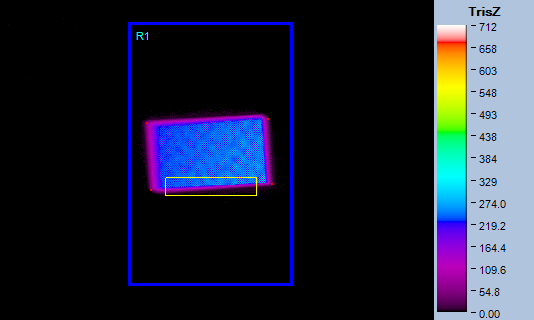

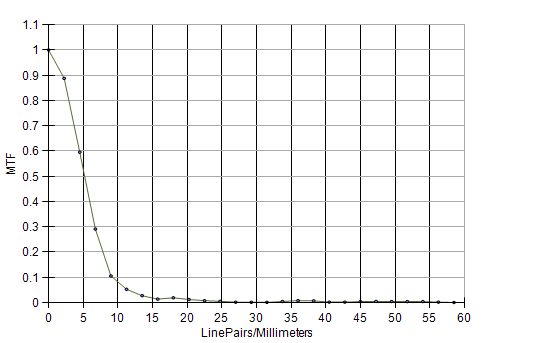

Radiant has developed a solution for measuring AR/VR/MR devices, with unique optical components specially engineered for NEDs. A high-resolution ProMetric® Imaging Colorimeter paired with the Radiant AR/VR Lens and TT-ARVR™ Software enables rapid, automated visual inspection of displays within AR/VR headsets. With this solution, multiple visual display characteristics can be tested simultaneously from a single image covering up to 120° (H) × 80° (V) field of view. Measurable characteristics include luminance, chromaticity, contrast, focus, MTF, distortion, field of view, and more.
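An imaging colorimeter typically reports CIE XYZ tristimulus values, where Y is luminance; chromaticity coordinates and contrast ratio then follow from standard formulas. The Python sketch below illustrates those formulas on single readings. It is not the TT-ARVR implementation, and the function names and sample values are hypothetical.

```python
from typing import Tuple


def chromaticity_xy(X: float, Y: float, Z: float) -> Tuple[float, float]:
    """CIE 1931 (x, y) chromaticity coordinates from tristimulus values."""
    total = X + Y + Z
    if total <= 0:
        raise ValueError("tristimulus sum must be positive")
    return X / total, Y / total


def contrast_ratio(white_luminance: float, black_luminance: float) -> float:
    """Full-on/full-off contrast ratio from two luminance (Y) readings in cd/m^2."""
    if black_luminance <= 0:
        raise ValueError("black luminance must be positive")
    return white_luminance / black_luminance


# Hypothetical readings: a white test pattern near D65 scaled to Y = 100 cd/m^2,
# and a black test pattern with a hypothetical black level of 0.05 cd/m^2.
white_xyz = (95.05, 100.0, 108.88)
black_y = 0.05

x, y = chromaticity_xy(*white_xyz)
print(f"white point: x={x:.4f}, y={y:.4f}")
print(f"contrast ratio: {contrast_ratio(white_xyz[1], black_y):.0f}:1")
```

In a real measurement these calculations would run per pixel or per region of interest across the captured image rather than on single numbers.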

The AR/VR Lens design simulates the size, position, and field of view of the human eye. Unlike alternative lens options, where the aperture is located inside the lens, the AR/VR Lens has its aperture at the front of the lens. This allows the imaging system’s entrance pupil to be positioned inside a NED headset at the same location as the user’s eye, capturing the full field of view the user sees.

Modulation transfer function (MTF) is calculated in TT-ARVR Software to determine overall image clarity. The software supports MTF Slant Edge, MTF Line Pair, and Radiant’s unique Line Spread Function (LSF) analysis methods for accurate results. The images above show MTF Slant Edge analysis.
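The core of edge-based MTF analysis can be sketched in a few lines: differentiate an edge spread function (ESF) to get the line spread function (LSF), then take the magnitude of its Fourier transform and normalize it at zero frequency. This simplified Python/NumPy sketch works on a single 1-D edge profile and omits the edge-angle estimation and sub-pixel binning that a full slanted-edge method (such as ISO 12233) performs. It is not Radiant's algorithm, and the synthetic blurred edge is hypothetical.

```python
import math
from typing import Tuple

import numpy as np


def mtf_from_edge(esf: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Estimate MTF from a 1-D edge spread function sampled at 1-pixel spacing.

    Returns (spatial frequency in cycles/pixel, MTF normalized to 1 at zero frequency).
    """
    lsf = np.diff(esf.astype(float))          # derivative of the ESF = line spread function
    lsf *= np.hanning(lsf.size)               # taper the ends to reduce truncation ripple
    spectrum = np.abs(np.fft.rfft(lsf))       # magnitude of the Fourier transform
    freqs = np.fft.rfftfreq(lsf.size, d=1.0)  # frequencies for 1-pixel sampling
    return freqs, spectrum / spectrum[0]


# Hypothetical test edge: an ideal step blurred by a Gaussian with sigma = 1.5 pixels.
sigma = 1.5
x = np.arange(-32, 32)
esf = np.array([0.5 * (1.0 + math.erf(xi / (sigma * math.sqrt(2.0)))) for xi in x])

freqs, mtf = mtf_from_edge(esf)
# MTF50: first sampled frequency at which the MTF drops below 0.5 (no interpolation).
mtf50 = freqs[np.argmax(mtf < 0.5)]
print(f"MTF50 ~ {mtf50:.3f} cycles/pixel")
```

For a Gaussian blur the analytical MTF is exp(-2π²σ²f²), which crosses 0.5 near 0.125 cycles/pixel for σ = 1.5 pixels; the sketch's estimate lands near that value, differing slightly because of the discrete derivative, the window, and the coarse frequency sampling.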

Watch this brief product demonstration to see how the Radiant AR/VR Lens solution can evaluate the visual quality of displays in headsets from the vantage point of the user, with the most accurate and efficient measurement for labs or production lines.

In this video, you will learn about:

- Radiant’s complete solution for in-headset AR/VR display testing

- TT-ARVR Software test suite for visual display inspection based on brightness, color, contrast, MTF, focus uniformity, distortion, field of view, and more

- Using the unique AR/VR Lens to replicate the size, position, and field of view of the human eye within headsets and smart glasses

CITATIONS

1. “Virtual reality sickness (VR motion sickness)”, TechTarget. Retrieved August 20, 2020.

2. Bhowmik, A., “Fundamentals of Virtual- and Augmented-Reality Technologies”, Short Course delivered for the Society for Information Display, May 20, 2018. (Source of the bulleted list above.)

3. Wetzstein, G., “Computational Near-Eye Displays: Engineering the Interface to the Digital World”, Chapter 3 in Frontiers of Engineering: Reports on Leading-Edge Engineering from the 2016 Symposium, National Academy of Engineering, 2017. Washington, DC: The National Academies Press. https://doi.org/10.17226/23659.

4. Harris, M., “A better virtual experience”, Physics World, May 1, 2018.

5. Banks, M., “Human Factors in Virtual and Augmented Reality”, presented at SID Display Week symposium, August 5, 2020.

6. Erkelens, I., “Vergence-Accommodation Conflicts in Augmented Reality: Impacts on Perceived Image Quality”, presented at SID Display Week symposium, August 5, 2020.

7. Tsai, T., “Research on Reducing Motion Sickness in First-Person Shooting VR Game with Texture Blur”, presented at SID Display Week symposium, August 5, 2020.
