New 3D Sensing and Novel Use Cases Expand the NIR Application Landscape

Three-dimensional (3D) sensing is depth-sensing technology. It goes beyond the capabilities of traditional cameras and imaging systems, which are designed to capture a two-dimensional (flat) image. By contrast, 3D sensing measures the height, width, depth, and shape of an object, more closely replicating how humans see the world.

The technology has caught on in recent years, finding widespread application in biometric identification (such as facial and gesture recognition), security and surveillance, and automotive lidar (light detection and ranging), among other fields. Demand is growing, and new use cases are emerging almost daily. In fact, the global market for 3D sensing technology is projected to grow at a 12% CAGR to more than $2.1 billion by 2026.[1]

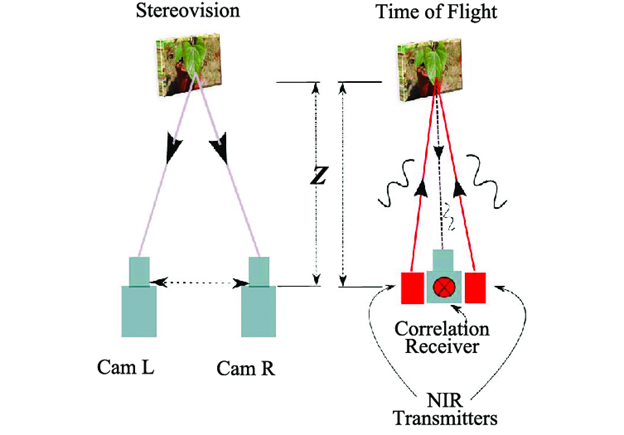

While some smartphones have long offered 3D sensing, the feature previously relied solely on stereoscopic imaging in the visible or nonvisible range. Stereoscopy uses two cameras placed a small distance apart to capture a scene from two slightly different vantage points. Combining the two images closely resembles human depth perception, in which the brain merges the slightly different views captured by each eye.
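The geometry behind stereoscopic depth can be sketched with the standard pinhole-camera relation for a rectified stereo pair, Z = f·B/d, where f is the focal length in pixels, B is the distance (baseline) between the two cameras, and d is the disparity, the horizontal shift of the same feature between the two images. This is a minimal illustration under those assumptions; the function name and camera numbers are hypothetical.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera pair: 1000 px focal length, lenses 12 mm apart.
for d in (40.0, 20.0, 10.0):
    print(f"disparity {d:>4.0f} px -> depth {stereo_depth_m(1000.0, 0.012, d):.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce only small disparities, so stereo depth precision falls off as distance grows.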

New forms of 3D sensing rely on reflected light rather than visual cues, deriving depth from the reflection's travel time or from the distortion of a projected pattern. These technologies project an invisible near-infrared (NIR) beam of light toward a scene or object (such as a human face), measure the reflected wavefront as it bounces off different parts of the object, and use that data to calculate the object's shape. The Apple iPhone® X was the first iPhone model to use structured patterns of NIR laser light for 3D sensing. Common methods of NIR-based 3D sensing include:

- Time of Flight (ToF): the time light takes to travel to an object and reflect back is used to measure the object's distance.

- Structured light: a small optical component called a diffractive optical element (DOE) splits a single laser beam into many tiny beams that form a dot pattern projected onto the object. The shape of the object distorts the reflected pattern, which enables detailed measurement, or mapping, of the object's 3D contours.
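For direct ToF, distance follows from the round-trip time: the light travels to the object and back, so d = c·t/2, where c is the speed of light. The short sketch below assumes an ideal single-pulse measurement; many commercial ToF sensors instead infer the delay indirectly from the phase shift of modulated light, which this example does not model. The function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Direct ToF: the light goes out and back, so halve the total path length."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A reflection arriving 10 ns after emission corresponds to roughly 1.5 m.
print(f"{tof_distance_m(10e-9):.3f} m")
# Timing resolution sets depth resolution: 100 ps of timing is about 1.5 cm.
print(f"{tof_distance_m(100e-12) * 100:.2f} cm")
```

The second line shows why ToF electronics must resolve picosecond-scale delays: every 100 ps of timing error corresponds to about 1.5 cm of depth error.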

Comparison of stereoscopic depth sensing using two cameras vs. NIR-based sensing systems such as Time of Flight (ToF), which uses reflected light to measure the distance of an object. (Image source: adapted from Kazmi et al.[2])

The NIR light used for 3D sensing can come from several sources:

- Light-Emitting Diodes (LEDs)

- Edge-Emitting Lasers (EELs)

- Vertical-Cavity Surface-Emitting Lasers (VCSELs). The market for VCSELs alone is projected to reach $1.8 billion in 2021, driven by 3D sensing and, in the future, by integration into 5G devices.[3] VCSELs are being adopted rapidly over other NIR sources because of their technical simplicity, narrow spectrum, and stable emission wavelength across temperature.
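The temperature advantage can be illustrated with simple arithmetic. A VCSEL's emission wavelength is set largely by its short vertical cavity, so it drifts much less with temperature than that of a conventional (Fabry-Pérot) edge-emitting laser, which follows the shifting gain peak. The coefficients below (about 0.07 nm/°C for a VCSEL and 0.3 nm/°C for an EEL) are approximate typical values assumed for illustration, not specifications of any particular device, and the operating range is hypothetical.

```python
# Approximate, typical temperature coefficients of emission wavelength (nm per °C).
# These are illustrative assumptions, not specifications of any particular device.
TYPICAL_DRIFT_NM_PER_C = {
    "VCSEL": 0.07,
    "EEL": 0.3,
}


def wavelength_shift_nm(source: str, t_min_c: float, t_max_c: float) -> float:
    """Total wavelength drift across an operating temperature range."""
    return TYPICAL_DRIFT_NM_PER_C[source] * (t_max_c - t_min_c)


# Hypothetical operating range from -20 °C to 80 °C (a 100 °C span).
for source in TYPICAL_DRIFT_NM_PER_C:
    print(f"{source}: ~{wavelength_shift_nm(source, -20.0, 80.0):.0f} nm shift")
```

A smaller drift lets the receiver use a narrower bandpass filter around 850 nm or 940 nm, which rejects more ambient sunlight while still passing the emitter's light.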

VCSELs are rapidly gaining acceptance as the technology of choice for a wide range of 3D sensing applications, from the facial recognition that unlocks your smartphone to autonomous vehicle lidar. In this post, we’ll take a look at a few emerging applications for NIR VCSEL technology.

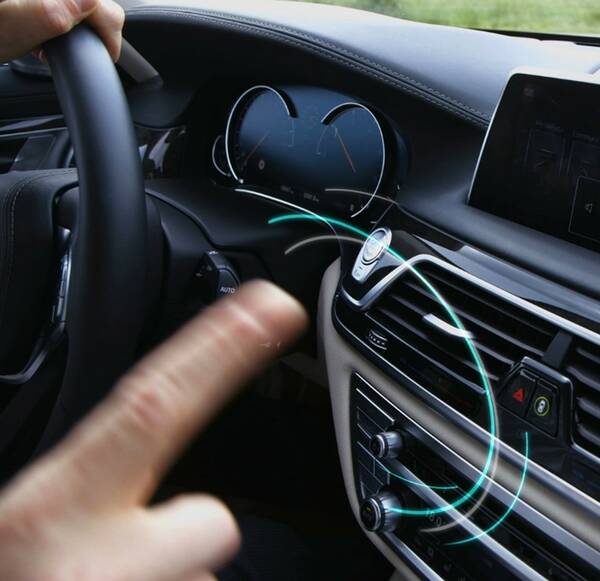

3D sensing applications for the automobile include both exterior object mapping and interior driver safety monitoring and control features, such as a gesture-activated infotainment panel system shown here from Sony’s extensive portfolio of depth-sensing products. (Image © Sony)

Lidar for Mapping Exterior and Interior Environments

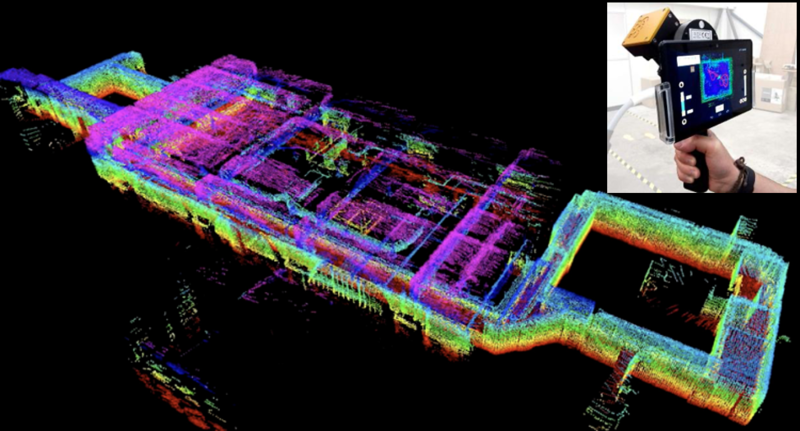

Lidar was first developed and used as a technique to map the contours of landscapes and monitor meteorological conditions. It was then taken up by the automotive industry for advanced driver-assistance systems (ADAS). Now it’s being brought indoors as a method to map architectural spaces. This application is especially valuable for emergency personnel who may have to enter a burning building or collapsed structure where visibility is low. Having a 3D digital map of a building interior can help first responders navigate through the structure and save lives. The National Institute of Standards and Technology (NIST) has been working on this under its Point Cloud City program, helping develop a handheld lidar scanning device that can map an entire building in just a few hours.
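A lidar scanner builds its 3D map one return at a time: each laser return gives a range plus the beam's horizontal (azimuth) and vertical (elevation) angles, which convert to x, y, z coordinates in the scanner's frame. The sketch below shows that conversion under a common convention (x forward, y left, z up); it is an illustration, not NIST's processing pipeline, and the function names and returns are hypothetical. A handheld scanner would also need to register points captured from different positions, which is omitted here.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one lidar return (range, azimuth, elevation) to x, y, z in the scanner frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def build_point_cloud(returns: List[Tuple[float, float, float]]) -> List[Point]:
    """Turn a sweep of (range, azimuth, elevation) returns into a point cloud."""
    return [to_cartesian(r, az, el) for r, az, el in returns]


# Hypothetical returns: a wall 4 m ahead, a point 3 m to the left, a point above and ahead.
sweep = [(4.0, 0.0, 0.0), (3.0, 90.0, 0.0), (2.0, 0.0, 30.0)]
for x, y, z in build_point_cloud(sweep):
    print(f"x={x:+.2f} y={y:+.2f} z={z:+.2f}")
```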

Information gathered from lidar scanners for indoor 3D maps could be used to point emergency responders to critical locations such as emergency exits, fire extinguishers, or utility shutoffs. Inset: A portable lidar scanner that can create 3D maps of indoor locations in a few hours. (Image source: NIST)

Drones and Logistics

Drones are becoming more common, used for everything from remote law enforcement surveillance to delivering Amazon packages. To enable not just remote but automated operation, companies have developed drone systems that combine 3D sensors, software, and algorithms. For example, a drone may combine ToF, lidar, stereo vision, and ultrasonic sensors so it can fly in autonomous modes with collision avoidance. Drones with advanced 3D sensing capabilities can now be applied to a wide variety of tasks, including:[4]

- Indoor Navigation

- Gesture Recognition

- Object Scanning

- Collision Avoidance

- Object Tracking

- Volume Measurement

- Surveillance of a Target Zone

- Counting Objects or People

- Fast, Precise Distance-to-Target Readings

- Augmented Reality/Virtual Reality

- Object Size and Shape Estimation

- Enhanced 3D Photography
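Collision avoidance and distance-to-target readings can be illustrated with a single depth frame from a ToF sensor. The sketch below, a hypothetical example rather than any vendor's flight software, finds the nearest valid return and brakes if the drone would reach it sooner than a chosen time margin. The frame format (metres, with None for pixels that returned no signal), the function names, and the 2-second margin are assumptions; real drones fuse several sensor types.

```python
from typing import List, Optional

DepthFrame = List[List[Optional[float]]]  # metres; None = no valid return for that pixel


def nearest_obstacle_m(frame: DepthFrame) -> Optional[float]:
    """Smallest valid depth in the frame, or None if nothing was detected."""
    valid = [d for row in frame for d in row if d is not None and d > 0.0]
    return min(valid) if valid else None


def should_brake(frame: DepthFrame, speed_m_s: float, min_time_to_collision_s: float = 2.0) -> bool:
    """Brake if the nearest obstacle would be reached sooner than the allowed margin."""
    nearest = nearest_obstacle_m(frame)
    if nearest is None or speed_m_s <= 0.0:
        return False
    return nearest / speed_m_s < min_time_to_collision_s


frame = [
    [None, 6.2, 6.0],
    [5.8, 3.1, 5.9],
    [None, 6.4, 6.1],
]
print(nearest_obstacle_m(frame))           # 3.1
print(should_brake(frame, speed_m_s=1.0))  # 3.1 s to collision -> False
print(should_brake(frame, speed_m_s=2.0))  # 1.55 s to collision -> True
```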

Infrared and NIR Medical Applications

The sun bathes our planet in a full spectrum of visible and invisible electromagnetic wavelengths. Some of these wavelengths can be harmful to humans, although our protective atmosphere at least partially blocks certain harmful wavelengths. For example, infrared and NIR light shone directly into the human eye can cause retinal damage. But new research is discovering ways that these longer, lower-frequency wavelengths can be beneficial as well.

Taking patient vital signs and monitoring various medical metrics has always required some type of close, in-person contact. For example, a nurse must physically put a blood pressure cuff on a patient to take a reading, and hospital patients may have multiple electrical sensors adhered to their skin for ongoing monitoring of heart rate and other critical metrics. NIR light may now provide remote, non-invasive ways to obtain patient data, a boon in these times of COVID-19 distancing, when hospital staff are stretched thin.

New research has shown that cameras spectrally filtered for the NIR range can estimate heart rate, heart rate variability, and other metrics. Because oxygenated and deoxygenated hemoglobin have different spectral characteristics under NIR light, a patient’s oxygen saturation level can also be measured with NIR cameras. Researchers in Germany have combined RGB, hyper-red, and NIR cameras with other elements to create a safe, multi-function medical monitoring system.[5]
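Camera-based heart rate estimation is commonly based on remote photoplethysmography: blood volume in the skin changes with each heartbeat, which slightly modulates how much light the skin reflects. Averaging a skin region's brightness in each video frame yields a trace whose dominant frequency within the plausible heart-rate band is the pulse rate. The sketch below is a minimal, assumed version of that idea using a plain discrete Fourier transform on a synthetic trace; it is not the method of the cited researchers, the function name and band limits are hypothetical, and practical systems add face tracking, motion compensation, and filtering.

```python
import math
from typing import Sequence


def estimate_heart_rate_bpm(signal: Sequence[float], fps: float,
                            min_bpm: float = 42.0, max_bpm: float = 180.0) -> float:
    """Return the dominant pulse frequency (beats per minute) of a brightness trace.

    The trace is the mean pixel value of a skin region in each video frame.
    A plain DFT is evaluated only at bins inside the plausible heart-rate band.
    """
    n = len(signal)
    mean = sum(signal) / n
    centred = [s - mean for s in signal]  # remove the DC (average brightness) term
    best_bpm, best_power = 0.0, -1.0
    for k in range(1, n // 2):
        bpm = k * fps / n * 60.0
        if not (min_bpm <= bpm <= max_bpm):
            continue
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centred))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 20 s trace at 30 fps: a 1.2 Hz (72 bpm) pulse on top of slow lighting drift.
fps = 30.0
trace = [100.0 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) + 0.01 * i
         for i in range(int(20 * fps))]
print(f"{estimate_heart_rate_bpm(trace, fps):.1f} bpm")
```

The frequency resolution is fps/n, here 0.05 Hz or 3 bpm, so longer recordings give finer heart-rate estimates.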

A camera system for the fast acquisition of 3D patient data in the visible and near-infrared (NIR) range: the top row of apertures holds the hyper-red camera, RGB camera, and NIR camera, and the bottom row holds a fast IR camera, the GOBO pattern projector, and a second fast IR camera. (Image credit: FSU Jena, Maximilian Hausmann)

Additionally, recent research on animals has shown that red and NIR light reduce respiratory disorders similar to the complications associated with coronavirus infection. Moreover, in human patients, red light has been shown to alleviate chronic obstructive lung disease and bronchial asthma.[6]

Measuring NIR Emitters for Accuracy

Makers of NIR LEDs and VCSELs, the light sources used in 3D sensing applications, must ensure the accuracy of emitters that serve so many vital functions. Because NIR sensing systems are used around, and frequently directed toward, humans, ensuring that emitted wavelengths meet intended specifications is critical for safety and performance.

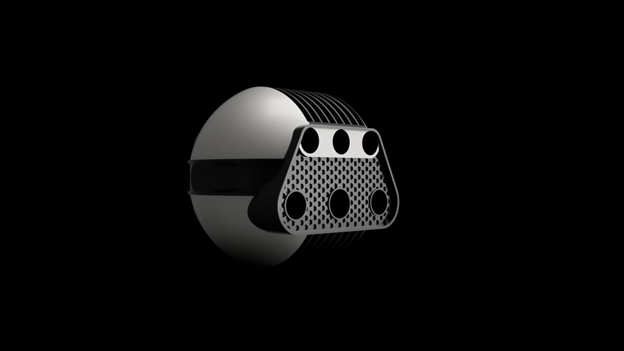

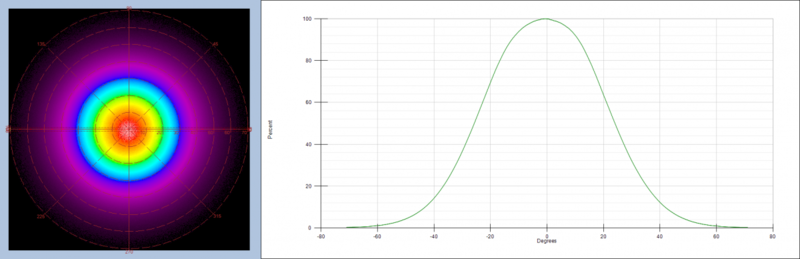

Radiant offers an NIR measurement solution designed for use both in the lab and on the production line to ensure the consistency and quality of these emitters. The Near-Infrared (NIR) Intensity Lens system is an integrated camera/lens solution that measures the angular distribution and radiant intensity of 850 nm or 940 nm near-infrared emitters. The solution uses Fourier optics to capture the full ±70° cone of emission in a single measurement, giving manufacturers extremely fast, accurate results ideal for in-line quality control.
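Measuring the angular distribution is what makes an emitter's total output calculable. For a rotationally symmetric emitter with radiant intensity I(θ) in W/sr, the radiant power inside a cone of half-angle θmax is P = 2π ∫ I(θ) sin θ dθ, integrated from 0 to θmax. The sketch below evaluates that integral numerically with the trapezoidal rule; it is standard radiometry, not Radiant's software, and the function name and Lambertian test emitter are hypothetical stand-ins for measured data.

```python
import math
from typing import Callable


def power_in_cone_w(intensity_w_per_sr: Callable[[float], float],
                    half_angle_deg: float, steps: int = 2000) -> float:
    """Radiant power inside a cone for a rotationally symmetric emitter.

    Integrates P = 2*pi * integral of I(theta) * sin(theta) dtheta from 0 to the
    half-angle, using the trapezoidal rule.
    """
    theta_max = math.radians(half_angle_deg)
    h = theta_max / steps
    total = 0.0
    for i in range(steps + 1):
        theta = i * h
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoid end-point weights
        total += weight * intensity_w_per_sr(theta) * math.sin(theta)
    return 2.0 * math.pi * total * h


def lambertian(theta: float) -> float:
    """Hypothetical Lambertian emitter with 0.1 W/sr on axis: I(theta) = I0 * cos(theta)."""
    return 0.1 * math.cos(theta)


p70 = power_in_cone_w(lambertian, 70.0)
p90 = power_in_cone_w(lambertian, 90.0)
print(f"power within ±70°: {p70 * 1000:.1f} mW ({p70 / p90:.1%} of the hemisphere)")
```

For a Lambertian emitter the exact answer is π·I0·sin²θmax, so a ±70° cone captures about 88% of the emitter's total output.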

Radiant’s NIR Intensity Lens, winner of the Gold Innovators Award from Laser Focus World and Silver Innovators Award from Vision Systems Design in 2019.

The NIR Intensity Lens is integrated with a ProMetric® Y16 Imaging Radiometer and specialized software to analyze the radiant intensity and uniformity of NIR emissions, including structured-light dot patterns, the flood-source NIR LEDs typically used for ToF measurements, and more. Part of our TrueTest™ family of automated inspection software, TT-NIRI™ provides a comprehensive NIR test suite that allows users to define measurement parameters and pass/fail criteria for specific points of interest in an image. The software module includes tests designed specifically for NIR light source measurement.

Cross-section radar plot in TT-NIRI software showing radiant intensity as a function of angle for an NIR LED light distribution.
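Pass/fail testing against user-defined criteria for points of interest can be sketched generically: each point has limits on its measured radiant intensity, and the emitter must also meet an overall uniformity ratio (minimum divided by maximum). The class, field names, limits, and readings below are hypothetical and do not reflect the TT-NIRI™ interface.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PointOfInterest:
    name: str
    measured_mw_per_sr: float
    min_mw_per_sr: float
    max_mw_per_sr: float

    def passes(self) -> bool:
        """True if the measured intensity lies within this point's limits."""
        return self.min_mw_per_sr <= self.measured_mw_per_sr <= self.max_mw_per_sr


def evaluate(points: List[PointOfInterest], min_uniformity: float) -> Dict[str, object]:
    """Check each point against its limits plus an overall min/max uniformity ratio."""
    values = [p.measured_mw_per_sr for p in points]
    uniformity = min(values) / max(values)
    failures = [p.name for p in points if not p.passes()]
    return {
        "failed_points": failures,
        "uniformity": uniformity,
        "pass": not failures and uniformity >= min_uniformity,
    }


# Hypothetical readings for a flood emitter: on-axis and at ±30° off-axis.
readings = [
    PointOfInterest("on-axis", 120.0, 100.0, 140.0),
    PointOfInterest("+30 deg", 95.0, 80.0, 140.0),
    PointOfInterest("-30 deg", 72.0, 80.0, 140.0),
]
print(evaluate(readings, min_uniformity=0.5))
```

Here the overall uniformity (72/120 = 0.6) would pass on its own, but the -30° point falls below its lower limit, so the emitter fails.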

To learn more about the NIR Intensity Lens solution, read our white paper, “Measuring Near-Infrared (NIR) Light Sources for Effective 3D Facial Recognition,” which discusses performance considerations for NIR emitters, techniques for measuring NIR sensing devices that use structured light and ToF, and more.

CITATIONS

1. Global 3D Sensing Technology Market Report, as reported by MarketWatch, “3D Sensing Technology Market 2020 Global Industry Size, Share, Revenue, Business Growth, Demand and Applications Market Research Report to 2026,” November 5, 2020.

2. Kazmi, W., et al., “Indoor and outdoor depth imaging of leaves with time-of-flight and stereo vision sensors: Analysis and comparison,” ISPRS Journal of Photogrammetry and Remote Sensing, 88:128-146, January 2014. DOI: 10.1016/j.isprsjprs.2013.11.012

3. “2020 Infrared Sensing Application Market Trend-Mobile 3D Sensing, LiDAR and Driver Monitoring System,” report from TrendForce, as reported in “Total VCSEL Revenue Expected to Reach US$1.8 Billion in 2021 Owing to Integration of 3D Sensing and 5G, Says TrendForce,” LEDInside, September 1, 2020.

4. “3D Sensing—New Ways of Sensing the Environment,” FutureBridge. Retrieved November 24, 2020.

5. Heist, S., et al., “Detectors & Imaging: Fast 3D imaging for industrial and healthcare applications,” Laser Focus World, December 17, 2019.

6. Enwemeka, C., et al., “Light as a potential treatment for pandemic coronavirus infections: A perspective,” Journal of Photochemistry and Photobiology B: Biology, Vol. 207, June 2020. DOI: 10.1016/j.jphotobiol.2020.111891
