With a Wave of Your Hand: 3D Gesture Recognition Systems

How cool would it be to make things happen just by waving our hands in the air, like Iron Man commanding his network of artificial intelligence-enabled systems? It’s like the ultimate personal IoT. Gesture recognition and IT technologies may not have reached the level of science-fiction movies quite yet, but they’re getting there.

In fact, the market for gesture recognition technologies is expected to grow at a 22.2% CAGR to become a $30.6 billion global industry by 2025.1 The automotive industry in particular is helping drive this growth, because gesture-controlled systems have the potential to enhance user experience, reduce driver workload, and increase vehicle safety.

Gestures are a natural and intuitive part of our human communication and expression. Thus, being able to use gestures to communicate with devices in our environment requires very little additional intellectual processing—we can do it almost without thinking. The benefit of using gestures while driving, for example, is that gesturing takes minimal attention away from other activities. By contrast, interacting with a touch screen display requires drivers to take their eyes off the road to make precise, on-screen selections.

BMW’s 7 Series introduced gesture recognition capabilities in 2016. Drivers can “turn up or turn down the volume, accept or reject a phone call, and change the angle of the multicamera view. There's even a customizable two-finger gesture that you can program”2 to whatever command you want, from “navigate home” to “order a pizza.”

Automotive component manufacturer Continental now offers gesture-recognition-capable systems integrated with the center display, instrument cluster, and steering wheel, enabling drivers to control functions with just a swipe of their hand or motion of their fingers. These systems, like many gesture recognition devices, use near-infrared (NIR) light in the 850-940 nm range, applying structured light and time of flight (TOF) methods.

Beyond the automobile industry, other promising applications of gesture recognition include healthcare (enabling sterile, hands-free computer interfacing in the operating room, for example), manufacturing, sign-language recognition, and assistive technology for people with reduced mobility.

Gesture Acquisition Technologies

Gesture recognition involves integrating multiple elements. The first step is to “acquire” the gesture, that is, to capture human movement in a form that can be processed. Gesture acquisition can be accomplished via device-based systems (for example, a glove controller worn by a user) or vision-based systems, which use some type of camera. Visual input systems can use a variety of technologies, including RGB, 3D/depth sensing, or thermal imaging.

The field of computerized hand-gesture recognition emerged in the early 1980s with the development of wired gloves that integrated sensors on the finger joints, called data gloves. Simultaneously, visual image-based recognition systems were emerging that relied on reading color panels affixed to gloves.3

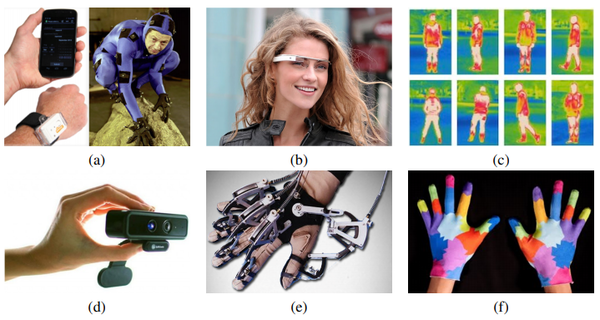

Examples of acquisition devices for gesture recognition: (a) left: mobile phone with GPS and accelerometer; right: inertial sensors with accelerometer and gyroscope attached to a suit worn by Andy Serkis to create the CGI character Gollum in The Lord of the Rings movies; (b) Google Glass for “egocentric” computing; (c) thermal imagery for action recognition; (d) audio-RGB-depth device; (e) active glove; and (f) passive glove.4

The first mass-market gesture recognition product was the Microsoft Kinect, a motion sensor add-on for the Xbox® gaming system. It used an RGB color VGA video camera, a depth sensor, and a multi-array microphone to capture and respond to player actions. While the consumer product has been phased out, the motion sensing platform is still available to developers under the name Azure Kinect.

Wired or controller-based gesture capture systems are still in widespread use; however, interest in “touchless” technology is taking off.1 In settings like the driver's seat of a vehicle and hospital operating rooms, the benefits of not having to touch a device are significant.

Visual Image Acquisition, Step by Step

Essentially, gesture recognition is the mathematical representation of human gestures via computing devices. It requires a sophisticated sequence of processes to capture, interpret, and respond to human input. Marxent Labs outlines four key steps:

Step 1. A camera captures image data and feeds it into a sensing device that’s connected to a computer.

Step 2. Specially designed software identifies meaningful gestures from a predetermined gesture library where each gesture is matched to a computer command.

Step 3. The software then correlates each real-time gesture against the library, interprets it, and identifies the library entry that matches.

Step 4. Once the gesture has been interpreted, the computer executes the command correlated to that specific gesture.
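Steps 2 through 4 can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how any particular product works: the gesture library, its template vectors, the command names, and the `max_distance` threshold are all hypothetical, and a gesture is reduced to the direction of the hand's path (with y assumed to increase upward).

```python
import math

# Hypothetical gesture library: each gesture has a template feature
# vector and the command it is matched to (illustrative values only).
GESTURE_LIBRARY = {
    "swipe_right": {"template": (1.0, 0.0), "command": "next_track"},
    "swipe_left": {"template": (-1.0, 0.0), "command": "previous_track"},
    "swipe_up": {"template": (0.0, 1.0), "command": "volume_up"},
    "swipe_down": {"template": (0.0, -1.0), "command": "volume_down"},
}


def extract_features(points):
    """Reduce a tracked hand path to a unit direction vector (start to end)."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    dx, dy = x1 - x0, y1 - y0
    length = math.hypot(dx, dy)
    if length == 0:
        return (0.0, 0.0)  # the hand did not move
    return (dx / length, dy / length)


def match_gesture(points, max_distance=0.5):
    """Return the closest library gesture, or None if nothing is close enough."""
    features = extract_features(points)
    best_name, best_dist = None, float("inf")
    for name, entry in GESTURE_LIBRARY.items():
        dist = math.dist(features, entry["template"])
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None


def command_for(points):
    """Interpret a hand path and look up the command tied to the gesture."""
    name = match_gesture(points)
    return GESTURE_LIBRARY[name]["command"] if name else None
```

Calling `command_for([(0, 0), (5, 1)])` would resolve a mostly horizontal, rightward path to the hypothetical `next_track` command, while a path that matches no template closely returns `None` so that no command is executed.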

Lumentum describes the key elements of the imaging system that would be used in Step 1:

Illumination source – LEDs or laser diodes typically generating infrared or near-infrared light. “This light isn’t normally noticeable to users and is often optically modulated to improve the resolution performance of the system.”5 Some systems combine a 2D color camera with a 3D sensing (NIR) light source and camera.

Controlling optics – Optical lenses help illuminate the environment and focus reflected light onto the detector surface. A bandpass filter lets only reflected light that matches the wavelength of the illuminating light (e.g., 940 nm) reach the light sensor, eliminating ambient and other stray light that would degrade performance.

Depth camera – A high-performance optical receiver detects the reflected, filtered NIR light, turning it into an electrical signal for processing.

Firmware – Very high-speed ASIC or DSP chips (also called gesture recognition integrated circuits, or ICs) process the received information and turn it into a format that can be understood by the end-user application, such as video game software.
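To make the depth-sensing idea concrete, here is a minimal Python sketch of the two standard time-of-flight relationships: distance from a measured round-trip time, and distance from the phase shift of modulated light. The function names and example values are illustrative assumptions; the source does not say which method a given system uses, and real sensors add calibration and noise handling that this sketch omits.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s):
    """Direct TOF: light travels to the target and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def distance_from_phase(phase_rad, modulation_hz):
    """Indirect TOF: distance implied by the phase shift of modulated light."""
    return SPEED_OF_LIGHT_M_PER_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)
```

A round trip of 4 nanoseconds corresponds to a hand roughly 0.6 m from the sensor, which shows why the receiver and processing chips need to be very fast. For phase-based measurement, a higher modulation frequency shortens the unambiguous range, which is one trade-off a designer would have to weigh.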

Image Processing Complexity

Even with all the system components involved, capturing the images for analysis (Step 1) is perhaps the easiest aspect of making gesture recognition systems work. Interpreting the information into a workable human-computer interaction model is a far bigger challenge (Steps 2 and 3). Unlike facial recognition, which simply matches a static captured pattern to a stored static pattern, gesture recognition requires complex analysis of dynamic movement.

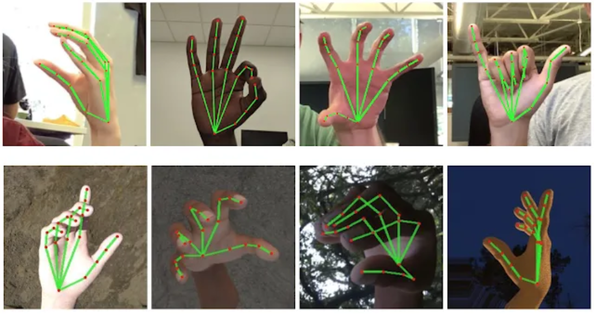

For analysis purposes, hand gestures can be broken down into multiple elements: the hand configuration (hand shape), its orientation, its location (position), and its movement through space. Gestures can also be crudely categorized into static (in which the hand holds one “posture”) and dynamic (in which the hand posture changes as it moves).

Skeletal-type hand gesture recognition images using Google’s open source developer algorithm, which provides real-time gesture recognition tools using a smart phone. (Image Source: Mashable)
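The static/dynamic distinction can be approximated very crudely in code. The sketch below looks only at how far the hand position travels across frames; it ignores changes in hand shape and orientation, so it is a simplification of the categories described here. The `movement_threshold` value is a hypothetical cutoff that would need tuning for a real sensor.

```python
import math


def classify_gesture(positions, movement_threshold=0.05):
    """Label a sequence of hand positions as 'static' or 'dynamic'.

    positions: list of (x, y, z) hand-centre coordinates, one per frame.
    movement_threshold: hypothetical cutoff on total path length, in the
    same units as the coordinates.
    """
    # Sum the distances between consecutive frames to get the path length.
    path_length = sum(
        math.dist(a, b) for a, b in zip(positions, positions[1:])
    )
    return "dynamic" if path_length > movement_threshold else "static"
```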

To assess, categorize, and interpret a range of human gestures is a complex, interdisciplinary task, combining aspects of computer vision and graphics, image processing, motion modeling, motion analysis, pattern recognition, machine learning techniques, bio-informatics, and even psycholinguistic analysis.6,7 Different approaches have been tried and combined to build workable computerized gesture classification models, such as neural network (NN), time-delay neural network (TDNN), support vector machine (SVM), hidden Markov model (HMM), deep neural network (DNN), and dynamic time warping (DTW), among others.8
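Of the approaches listed, dynamic time warping is the simplest to show in a few lines. The sketch below implements the textbook DTW recurrence on one-dimensional sequences and uses it for nearest-template classification. The template names and values in the usage note are invented for illustration; a practical system would work on multi-dimensional features and often combine DTW with other models.

```python
def dtw_distance(seq_a, seq_b):
    """Dynamic time warping distance between two 1-D numeric sequences."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(seq_a[i - 1] - seq_b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # seq_a advances, seq_b element repeats
                cost[i][j - 1],      # seq_b advances, seq_a element repeats
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


def classify(sample, templates):
    """Return the name of the template with the smallest DTW distance."""
    return min(templates, key=lambda name: dtw_distance(sample, templates[name]))
```

With hypothetical templates `{"swipe": [0, 1, 2, 3], "hold": [1, 1, 1, 1]}`, a slower swipe such as `[0, 0, 1, 2, 2, 3]` still classifies as `"swipe"`, because DTW tolerates differences in speed between the sample and the template.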

Gesture Recognition in Use

Despite these challenges, companies are developing successful hand-gesture recognition systems that are already in use today in sectors from gaming to medicine. For example, Microsoft worked with Novartis to develop an innovative system for assessing progressive functioning in patients with multiple sclerosis.

Leap Motion makes a sensor that detects hand and finger motions. Besides controlling a PC, the sensor performs hand tracking in virtual reality, allowing users to interact with virtual objects.

Powered by Near-Infrared (NIR) Sensing

Many of the successful hand gesture recognition systems in use today rely on NIR light, which is invisible to humans, to illuminate the motion of a human user. NIR light supports depth measurement and 3D sensing functions that use structured light and/or TOF approaches to generate input data. To ensure the accuracy of NIR-based gesture recognition systems, NIR light sources (typically LED or laser emitters like VCSELs) must operate correctly. Manufacturers of hand-gesture systems need to verify performance, ensuring that the illumination sources emit NIR light at an intensity that is both sufficient for the application to work and safe for human exposure.
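For the structured light approach, depth is commonly recovered by triangulation: the farther a projected dot appears shifted in the camera image, the closer the surface. The sketch below uses the standard pinhole relationship between depth, focal length, emitter-to-camera baseline, and dot shift (disparity). It assumes an idealized, rectified emitter and camera pair, and the example numbers are hypothetical rather than taken from any real device.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Triangulated depth in metres for an idealized structured-light setup.

    focal_length_px: camera focal length expressed in pixels.
    baseline_m: distance between the NIR emitter and the camera, in metres.
    disparity_px: observed shift of a projected dot, in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px
```

With a hypothetical 580-pixel focal length and a 7.5 cm baseline, a dot shifted by 87 pixels would correspond to a surface about 0.5 m away. A dim or uneven dot pattern makes the shift harder to measure, which is one reason emitter output matters for accuracy.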

Radiant provides an NIR Intensity Lens solution for accurate measurement and characterization of NIR emitters, such as those used in gesture recognition, facial recognition, and eye tracking applications. The lens, combined with a ProMetric® Imaging Photometer and TrueTest™ software, is a complete solution for NIR light source measurement. For facial and gesture recognition applications, the TT-NIRI™ software module of TrueTest includes specific tests for NIR light source measurement.

Both facial and gesture recognition systems use the same 3D sensing approaches of structured light (dot patterns) and TOF measurement. Learn more about testing NIR emissions of sources used in human-centered technology by reading our white paper: Measuring Near-Infrared (NIR) Light Sources for Effective 3D Facial Recognition.

CITATIONS

1. Gesture Recognition Market Size, Share & Trends Analysis Report By Technology (Touch-based, Touchless), By Industry (Automotive, Consumer Electronics, Healthcare), and Segment Forecasts, 2019-2025, Grand View Research, January 2019.

2. Wendorf, M., “How Gesture Recognition Will Change Our Relationship With Tech Devices”, Interesting Engineering, March 31, 2019.

3. “Historical Development of Hand Gesture Recognition”, Chapt. 2 in Premaratne, P., Human Computer Interaction Using Hand Gestures. Cognitive Science and Technology, Springer Science+Business Media Singapore 2014. DOI 10.1007/978-981-4585-69-9_2.

4. Escalera, S., Athitsos, V., and Guyon, I., “Challenges in multimodal gesture recognition”, Journal of Machine Learning Research, Vol 17 (2016), pages 1-54.

5. 3D Sensing/Gesture Recognition and Lumentum, White Paper published on Lumentum.com, 2016.

6. Wu, Y., and Huang, T., “Vision-Based Gesture Recognition: A Review”.

7. Sarkar, A., Sanyal, G. and Majumder, S., “Hand Gesture Recognition Systems: A Survey”, International Journal of Computer Applications (0975 – 8887), Volume 71, No. 15, May 2013.

8. Cicirelli, G. and D’Orazio, T., “Gesture Recognition by Using Depth Data: Comparison of Different Methodologies”, Motion Tracking and Gesture Recognition (Gonzalez, C. Editor), July 12, 2017. DOI: 10.5772/68118
